The reason I think I found this fun.

I’ve always been interested in things that produce unexpected results, even better if it’s something that can surprise me as the creator. Think of the idea of an AI deciding to protect humanity by destroying it, but hopefully less evil.

This project also had the advantage of reacting to its environment, so it provides a snapshot/signature of the brightness of its surroundings.

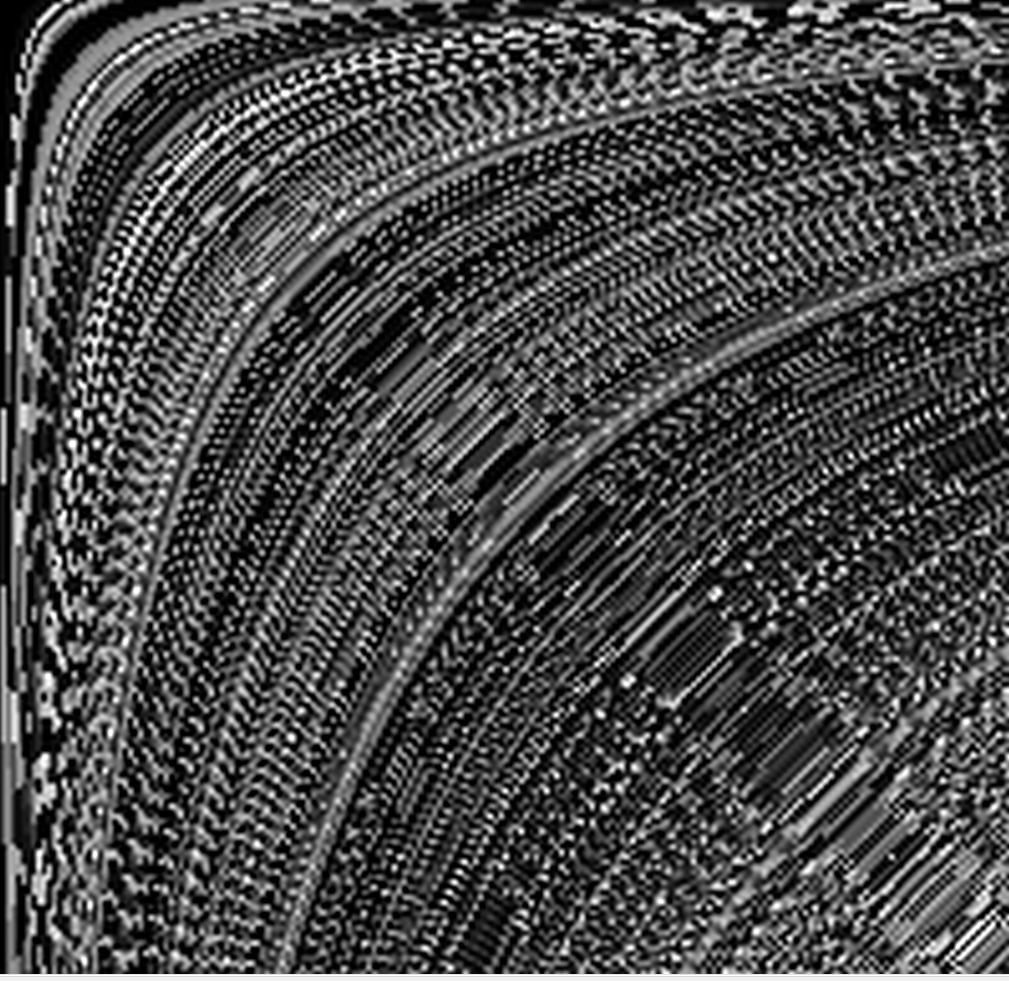

The other enjoyable aspect was how much it looked like old-school footage where, fittingly, there’s a lot of latency or low bandwidth (like space footage or old cameras).

The part before the fun pictures.

This setup was split between two pieces.

An Arduino in a shoebox with a photoresistor and an LED. The Arduino reads a value from the serial connection, sets the LED’s brightness, then writes the photoresistor’s output back to the serial connection.

A Python application that reads from and writes to the serial connection. It is basically sending information to itself in a way that is neither a. efficient nor b. accurate, but it is interesting. These are of course not mutually exclusive.

I could control the speed at which the LED flashed, and how the photoresistor handled the brightness.
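Here is a rough sketch of what the Python side of that loop could look like. It is not my actual program: the one-number-per-line wire format, the `run_through_box` name, and the `settle_seconds` knob are all assumptions made up for illustration. `FakeBox` is a stand-in so the sketch runs without any hardware attached.

```python
import time


def run_through_box(port, brightness_values, settle_seconds=0.0):
    """Send each brightness to the box and collect the sensor readings.

    `port` is any object with write(bytes) and readline() -> bytes,
    for example a pyserial `serial.Serial` instance.
    """
    readings = []
    for value in brightness_values:
        value = max(0, min(255, int(value)))      # clamp to a single PWM byte
        port.write(f"{value}\n".encode("ascii"))  # assumed format: one number per line
        if settle_seconds:
            time.sleep(settle_seconds)            # give the LED and sensor time to settle
        line = port.readline().decode("ascii").strip()
        readings.append(int(line) if line else None)  # None marks an empty or timed-out reply
    return readings


class FakeBox:
    """Stand-in for the hardware: replies with a scaled copy of what it was sent."""

    def __init__(self):
        self._last = 0

    def write(self, data):
        self._last = int(data.decode("ascii").strip())
        return len(data)

    def readline(self):
        # Pretend an 8-bit brightness comes back as a 10-bit sensor value.
        return f"{self._last * 4}\n".encode("ascii")


if __name__ == "__main__":
    print(run_through_box(FakeBox(), [0, 128, 255, 300]))
```

With real hardware, the `port` argument could be something like `serial.Serial("/dev/ttyUSB0", 9600, timeout=1)` from pyserial, where the device name and baud rate are placeholders. A shorter `settle_seconds` means faster flashing and, presumably, messier readings.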

Running the brightness values of a black and white image produced a lot of fun imagery.
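To make "running the brightness values of an image" a bit more concrete, here is a minimal sketch of the two bookkeeping steps around the box: flattening an image into a stream of values, and cutting the returned readings back into rows. The function names are hypothetical, and the 0 to 1023 reading range is an assumption based on the 10-bit analog input found on many Arduino boards.

```python
def flatten(image):
    """Turn a list of rows into one long stream of brightness values."""
    return [pixel for row in image for pixel in row]


def rebuild(readings, width, max_reading=1023):
    """Scale sensor readings to 0-255 and cut the stream back into rows."""
    scaled = [min(255, max(0, r * 255 // max_reading)) for r in readings]
    return [scaled[i:i + width] for i in range(0, len(scaled), width)]


if __name__ == "__main__":
    source = [[0, 255], [128, 64]]           # a tiny 2x2 grayscale "image"
    stream = flatten(source)                 # [0, 255, 128, 64]
    fake_readings = [v * 4 for v in stream]  # stand-in for what the box returns
    print(rebuild(fake_readings, width=2))
```

Because the image travels as one continuous stream, anything that goes wrong with timing or width shows up as a shifted or skewed picture rather than an error.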

If you want to see a really neat example of what it can produce, here is an animation with its “staticbox” equivalent beside it. I added some background music to enhance its otherworldliness.

The part with the fun pictures

Text tilted to the side! Here I was just seeing what I could get away with in terms of resolution/refresh rate.

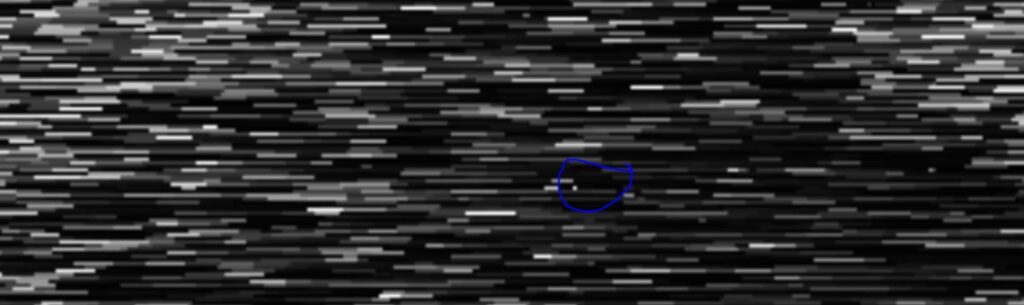

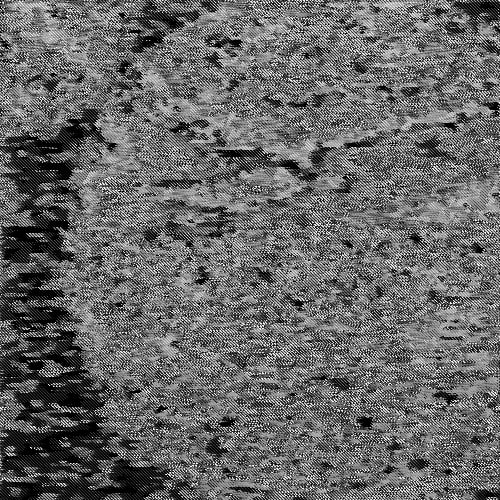

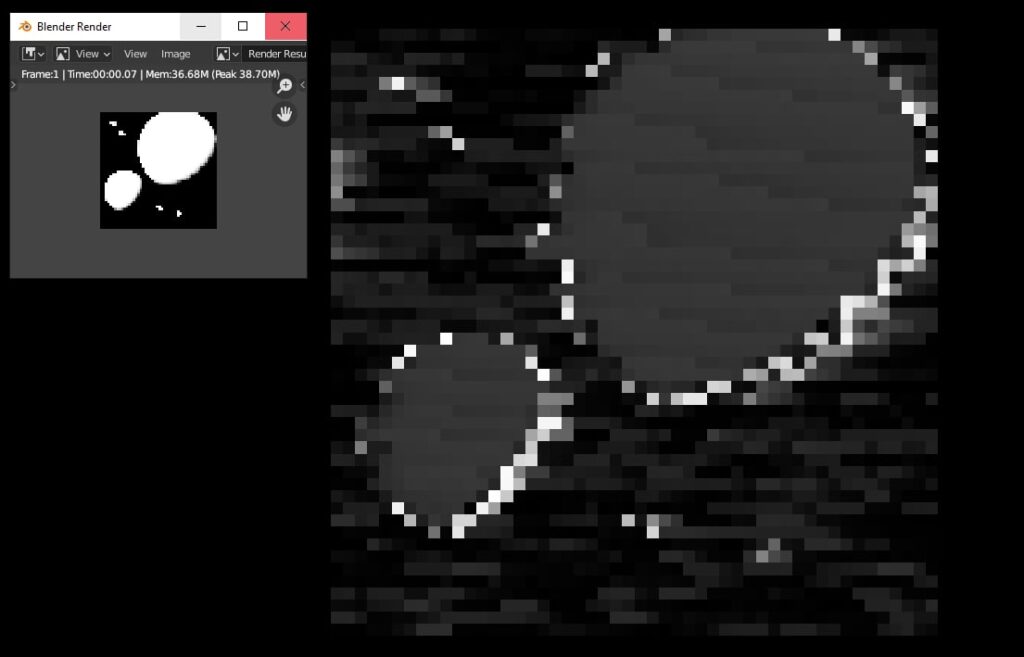

A comparison of the render on the right and the output on the left. The smearing happens when there wasn’t enough time for the photoresistor/LED setup to cleanly capture a pixel, so it was dragging. This carries over into the edges, where you can see the image bleed from the right side to the left.

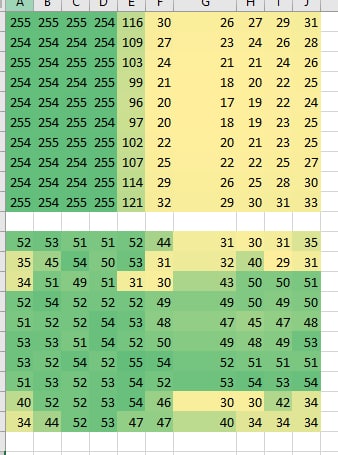
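The smearing can be imitated in software with a very simple model: each reading only moves part of the way toward the new brightness before the next pixel arrives. This is a guess at the behaviour, not something I measured, and the `response` value is an arbitrary assumption.

```python
def smear(stream, response=0.5, start=0.0):
    """Model a slow sensor: each reading moves only part-way to the new value."""
    level = start
    out = []
    for target in stream:
        level += response * (target - level)  # close a fraction of the remaining gap
        out.append(round(level))
    return out


if __name__ == "__main__":
    # Two rows of four pixels sent back to back: a bright row, then a dark one.
    rows = [255, 255, 255, 255, 0, 0, 0, 0]
    print(smear(rows, response=0.5))
```

In this model the dark row starts out grey because the sensor is still "remembering" the bright row before it. Since the rows are sent one after another, that leftover brightness lands on the opposite edge of the next row, which matches the bleed from right to left.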

I ran into some weird issues where I was getting values I wasn’t expecting. (The issue was with the original data, not my actual program.)

So I imported the data into Excel to try to get an idea of what was going on.

Because it involved math, I had some minor problems getting the recode to function correctly. It still looked neat, but it was not the circle I was hoping for.

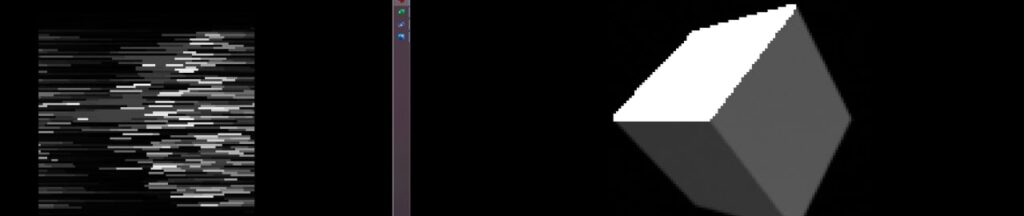

Thankfully, since I was using a program like Blender to generate my original images, I didn’t have to worry about rescaling or not having enough pixels for a larger image.

Another example of a source image and its output.

Ideas I had after this:

- Increase the transfer/baud rate so I can have faster results and iteration time.

- Make a Blender plugin that lets me hit “Render in Staticbox” and then have it sent to my Python application. It would help me by killing off a step and just making it easier to experiment.

- Make something that lets me record my daily light exposure so I can make an image based off it.

- Introduce different kinds of distortions, like having several going at once, so the interactions are unpredictable.

- Make a data driver that just corrupts information. I would love to be able to send a file through it and basically replace one of the OSI Model’s layers.